Spark installation on AWS EC2 instance

If you followed my previous guide probably you will already have installed Jupyter Notebook running on a EC2 instance on the aws cloud. This is the second part.

Apache Spark

“Apache Spark” is an open-source cluster-computing framework. Spark provides an interface for programming entire clusters with implicit data parallelism and fault tolerance. To explain the term “cluster” in plain language imagine a group of computers working like “slaves” under the guidance and instructions of a “master” computer. We often call these computers “nodes”. So the master node is responsible to manage a “queue” of jobs (tasks) and assign each task to an available slave node. That way, we achieve data parallelism and rapid big data processing.

Spark on AWS (or any other cloud platform) offers the option to spin up easily computer instances (they are called “EC2” instances) and let them work as slaves under the guidance of a master node (in Spark we call the master “driver”).

To work with Python and Spark together we have to install PySpark on Jupyter Notebook. With the previous part we installed the Jupyter Notebook. The following Spark installation will be a stand alone installation, but it will give you an understanding of how things work.

Java and Scala

-Spark requires Scala to be installed and of course Scala runs on top of Java. So lets update our ubuntu EC2 server and then install Java:

sudo apt-get update -y

sudo apt-get install default-jre -y

-Check java version:

java -version

-Install Scala

sudo apt-get install scala -y

-Check scala version:

scala -version

Install py4j with anaconda version of pip

-Append Anaconda binary path to our PATH variable:

export PATH=$PATH:$HOME/anaconda3/bin

-Install conda version of pip

conda install pip

type “y” for yes on the question

-Check which pip.

which pip

![]()

If the result includes “anaconda3/bin/pip” then we are fine:

-Install python for java “py4j”

pip install py4j

Download and install Spark

-Download Spark to “/home/ubuntu” directory

cd ~

wget http://apache.mirrors.tds.net/spark/spark-2.3.1/spark-2.3.1-bin-hadoop2.7.tgz

-Extract the “tarball”

tar -xzvf spark-2.3.1-bin-hadoop2.7.tgz

Export paths

export SPARK_HOME=’/home/ubuntu/spark-2.3.1-bin-hadoop2.7′

export PATH=$SPARK_HOME:$PATH

export PYTHONPATH=$SPARK_HOME/python:$PYTHONPATH

Run jupyter notebook and check spark

-Run Jupyter. On your terminal type:

jupyter notebook

-Check if Spark works: Open a new tab on your browser and go to the public DNS page of your server followed by colon and port 8888 “:8888”

https://<public_DNS_of_EC2> :8888

-Open a new notebook:

-On the first cell write :

from pyspark import SparkContext

sc = SparkContext()

then hit Shift+Enter. If it runs without errors and you see number “1” next to the cell, then everything is set up and Spark works under python’s Jupyter Notebook!!!

Close Jupyter Notebook and Terminate the EC2 instance

-Close the Jupyter Notebook by the combination “ctrl + C” on your terminal

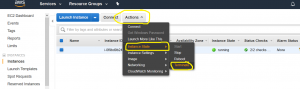

–Do not forget to terminate your aws EC2 instance.